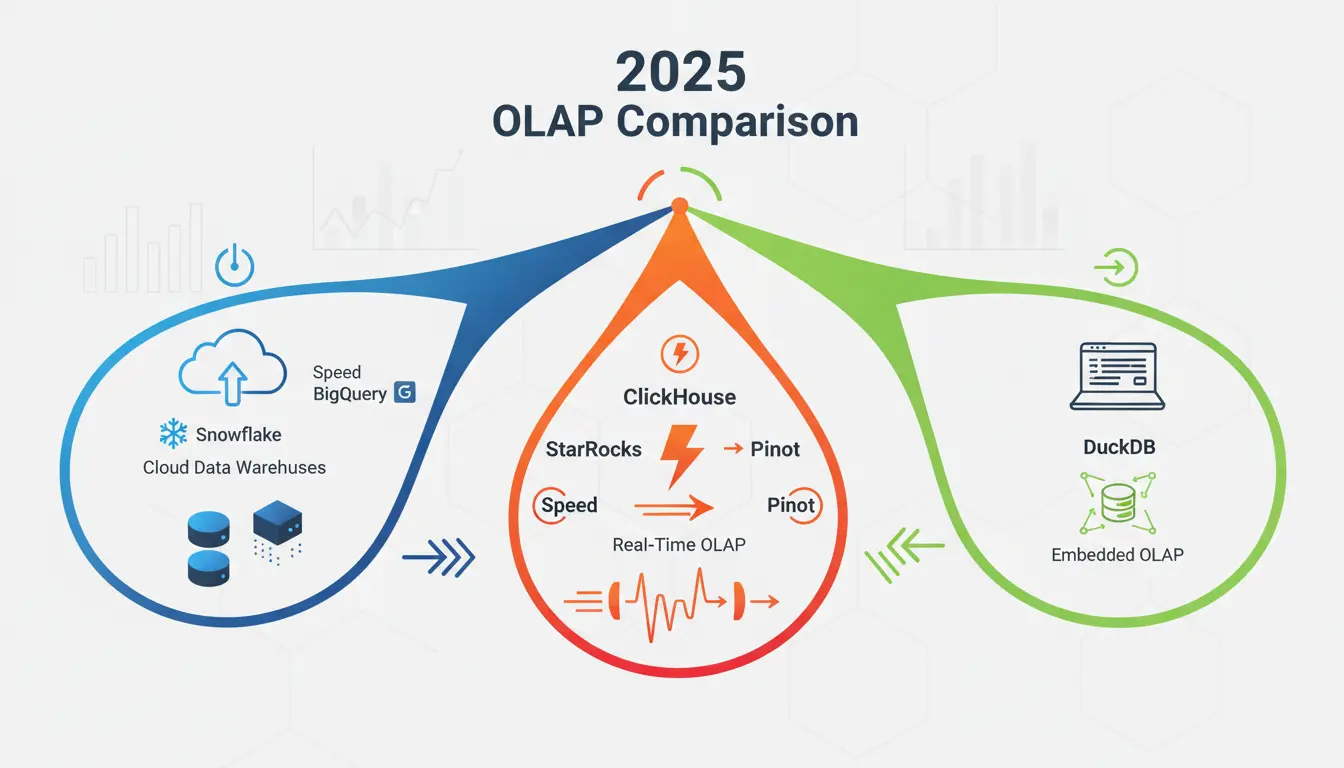

The OLAP database market isn’t a single battlefield anymore. In 2024-2025, comparing “ClickHouse vs. Snowflake” is like comparing a race car to a cargo ship—they’re built for completely different purposes. The real question isn’t which database is better, but which archetype matches your workload.

After analyzing seven leading OLAP solutions, three distinct architectural patterns emerge. Choosing the wrong one is the most expensive mistake you can make in modern data architecture.

The Three Archetypes That Matter

Cloud Data Warehouses: Snowflake and BigQuery

These are the established giants—mature, petabyte-scale, fully-managed platforms built on separated storage and compute. Think of them as the “enterprise standard” for internal Business Intelligence and complex batch analytics.

Snowflake’s Architecture: Three independently scalable layers—storage, compute (Virtual Warehouses), and cloud services. Different teams can run isolated workloads on separate warehouses, preventing resource conflicts. The trade-off? Query latency is measured in seconds, not milliseconds.

BigQuery’s Advantage: Completely serverless. No infrastructure management whatsoever. The Dremel engine allocates compute on-demand through “slots.” For organizations deeply invested in Google Cloud Platform (GCP), the native integration with Dataflow, Pub/Sub, and Looker is unbeatable.

The Acceleration Layer: Both platforms are now adding speed boosters. Snowflake’s “Interactive Tables” and BigQuery’s “BI Engine” are essentially in-memory caches designed to compete with real-time OLAP systems for low-latency queries.

Real-Time OLAP Engines: The Speed Demons

ClickHouse, Apache Druid, Apache Pinot, and StarRocks belong here. These distributed database servers are built for two things: ingesting massive streams of data in real-time and serving ultra-low-latency queries. Their primary job isn’t internal reporting—it’s powering applications.

ClickHouse: The Single-Table Champion

ClickHouse uses a vectorized execution engine that processes data in columnar blocks, maximizing CPU efficiency. It’s the fastest system for simple aggregations on large, single tables. The MergeTree storage engine continuously merges smaller data parts into optimized chunks in the background.

The catch? ClickHouse traditionally struggled with complex joins and high concurrency. While recent improvements have helped, it’s still not designed for join-heavy queries or serving thousands of concurrent users. Best for: internal observability, log analytics, and APM dashboards where you control the query load.

StarRocks: The Join Master

StarRocks stands out with its sophisticated Cost-Based Optimizer (CBO). While ClickHouse forces you to denormalize data into flat tables, StarRocks can efficiently handle complex, multi-table joins. Benchmarks show it outperforming ClickHouse by 1.87x on Star Schema queries and 3-5x on TPC-H workloads.

Its “Primary Key model” enables efficient real-time updates and deletes—a critical feature for handling Change Data Capture (CDC) streams. StarRocks also maintains stable sub-second P95 latency under 500 concurrent users, where ClickHouse’s performance degrades significantly.

Apache Pinot: The P99 Latency King

Pinot was purpose-built at LinkedIn for one thing: serving ultra-low-latency analytics to millions of external users. Its secret weapon is its rich indexing system—inverted indexes, Star-Tree indexes (pre-aggregated data structures), range indexes, and specialized JSON/geospatial indexes.

By front-loading computational work at ingestion time, Pinot can answer aggregation queries in milliseconds by reading pre-computed values instead of scanning raw data. It handles hundreds of thousands of queries per second while maintaining P99 latencies under 100 milliseconds. Perfect for user-facing dashboards like Uber Eats’ restaurant manager interface.

Apache Druid: The Time-Series Specialist

Druid excels at time-series data with its segment-based architecture optimized for time-based filtering. It provides millisecond-level data freshness from Kafka streams.

The downside? Druid has a disaggregated microservices architecture requiring you to manage five different node types (Brokers, Coordinators, Overlords, Historicals, MiddleManagers). This operational complexity is severe. Worse, Druid has no UPDATE or DELETE statements—to modify data, you must perform batch re-ingestion jobs that overwrite entire time-based segments.

Embedded OLAP: DuckDB

DuckDB is the disruption. It’s not a server—it’s an in-process library you link into your application. Think “SQLite for analytics.”

This architecture shift is powerful. DuckDB runs complex analytical SQL queries directly on Parquet files, CSVs, and even Python Pandas DataFrames without ingestion. It performs aggregations and joins orders of magnitude faster than in-memory dataframe libraries.

The use case? DuckDB isn’t competing with Snowflake or ClickHouse in production servers. It’s replacing Pandas for local data science, powering analytics within single applications, and serving as a high-performance query engine for data lakes. Zero infrastructure, zero operational overhead.

The Critical Performance Differences

Data Freshness: Milliseconds vs. Seconds

True Stream-Native (Milliseconds): Pinot and Druid ingest events from Kafka row-by-row and make them queryable in milliseconds.

Micro-Batch Model (Seconds): ClickHouse isn’t stream-native—it uses micro-batching. Data is only queryable after seconds to minutes, depending on batch size. Head-to-head comparisons show a visible “plateau” in data freshness.

Near-Real-Time (Seconds): StarRocks provides reliable second-level data ingestion. The cloud data warehouses (Snowflake’s Snowpipe Streaming, BigQuery’s streaming inserts) have reduced their latency from minutes to seconds, but can’t match the millisecond freshness of Pinot or Druid.

The Concurrency Crisis

For user-facing applications, 99th-percentile (P99) latency is everything. Average latency is a vanity metric. If your P99 is high, 1% of your users—often your most valuable, high-traffic users—are experiencing a broken system.

Pinot’s Dominance: Architectural design for strict P99 SLAs under extreme load. Replica Group Routing and partition-aware routing minimize the “slowest-node” problem.

StarRocks’ Strength: Handles tens of thousands of queries per second with stable sub-second P95 latency at 500 concurrent users.

ClickHouse’s Achilles’ Heel: Performance degrades catastrophically under concurrent load. A real-world test on a 32-core machine showed a query that ran in 383ms in isolation slowing to 10 seconds with just 30 parallel users. DiDi’s benchmark limits ClickHouse to hundreds of QPS, versus StarRocks’ tens of thousands.

The Mutation Revolution

The old OLAP trade-off—immutability for speed—is dead. Modern applications need efficient updates for CDC, user corrections, and privacy regulations like GDPR.

Gold Standard: Snowflake and BigQuery provide mature, full DML support (INSERT, UPDATE, DELETE, MERGE) with no performance penalties.

StarRocks & Pinot: Native upsert support designed for real-time CDC streams. Low-impact, high-throughput mutations.

ClickHouse’s 2024 Game-Changer: “Lightweight updates” now write tiny “patch parts” instead of rewriting entire data parts. Benchmarks show 1,600x faster performance (60 milliseconds vs. 100 seconds). This makes ClickHouse newly viable for CDC use cases.

Druid’s Fatal Flaw: No UPDATE or DELETE statements. Period. You must perform batch re-ingestion jobs to modify data. This makes Druid fundamentally unsuitable for any use case requiring frequent, granular updates.

The Lakehouse Integration Race

The 2025 paradigm is querying open-format files (Apache Iceberg, Parquet) directly in cloud storage without mandatory ingestion.

First-Class Winners: StarRocks 4.0 introduced “first-class Apache Iceberg support” with optimized metadata parsing. Pinot (via StarTree) became the first system to enable low-latency serving directly on data lakes by applying its indexes to Parquet files in S3. DuckDB excels at querying Parquet and S3-hosted files directly.

Weaker Integration: ClickHouse primarily uses table functions like s3() for federated access—less seamless than native catalog integration. Druid is fundamentally built around owning data in its native segment format.

Your Decision Framework

For Startups (Speed, Flexibility, Low Overhead)

Start Local: DuckDB for all analytical projects and data science workflows. Zero infrastructure, powerful SQL engine, replaces slow Pandas scripts.

First Scalable Server: Managed ClickHouse or StarRocks. Never self-host—operational complexity will consume your engineering resources.

- Choose ClickHouse if: Append-only data (logs, events), simple aggregations, low-to-moderate concurrency

- Choose StarRocks if: Need joins, real-time CDC/updates, high concurrent users

Avoid: Self-hosting Apache Druid. The microservice architecture’s operational burden will destroy a small team.

For Enterprises (Internal BI, Data Warehousing)

Default Choice: Snowflake or BigQuery. The decision is strategic, not technical.

- Choose BigQuery if: GCP-native shop, want seamless integration with Looker/Dataflow/Vertex AI

- Choose Snowflake if: Multi-cloud strategy, need granular compute cost control via Virtual Warehouses

Advanced Pattern: Use StarRocks or Pinot as a high-performance federated query layer on top of your data lake (Iceberg/Hudi), while the CDW provides cold storage and batch processing.

For Real-Time & User-Facing Analytics

This is the most contested and nuanced use case—serving analytics to customers as part of your product.

Choose Pinot if: Powering high-traffic user-facing dashboards (100k+ QPS), “Who Viewed My Profile” features, real-time personalization APIs. You can pre-define query patterns and pre-aggregate at ingestion for unbeatable P99 latency.

Choose StarRocks if: Complex B2B SaaS dashboards requiring multi-table joins, thousands of concurrent users, and real-time updates. The only database combining low-latency, high-concurrency MPP with sophisticated join optimization and native updates.

Choose ClickHouse if: Internal real-time observability, APM, log analytics. Fastest raw-scan performance on single tables, best compression, perfect for controlled internal tools with lower concurrency.

The Bottom Line

The OLAP market has fragmented into specialized tools. There is no “best” database—only the right archetype for your workload. The most expensive mistake isn’t choosing between vendors within an archetype. It’s choosing the wrong archetype entirely.

Match your workload to the architecture, not the marketing hype.

Note: This analysis is based on the 2024-2025 OLAP Database Report, a comprehensive technical comparison of ClickHouse, Apache Druid, Apache Pinot, StarRocks, DuckDB, Snowflake, and BigQuery across architecture, performance benchmarks, and real-world use cases.