AI systems that can perceive, reason, plan, and act autonomously are no longer just science fiction. In 2025, organizations around the world are deploying autonomous agents to handle everything from email summaries and customer support tickets to competitive research and complex data analysis. These systems promise enormous productivity gains, but they also raise important questions about trust and control.

Here’s a striking statistic: according to Capgemini’s 2025 research report “Rise of Agentic AI,” only 27% of organizations trust fully autonomous AI agents, down from 43% just one year earlier. Part of the problem is terminology. The term “data agent” has been applied to everything from simple SQL chatbots to sophisticated multi-agent orchestration systems, so buyers and builders often mean different things by it. Without a clear vocabulary, it becomes nearly impossible to set proper expectations, build appropriate guardrails, or design responsible products.

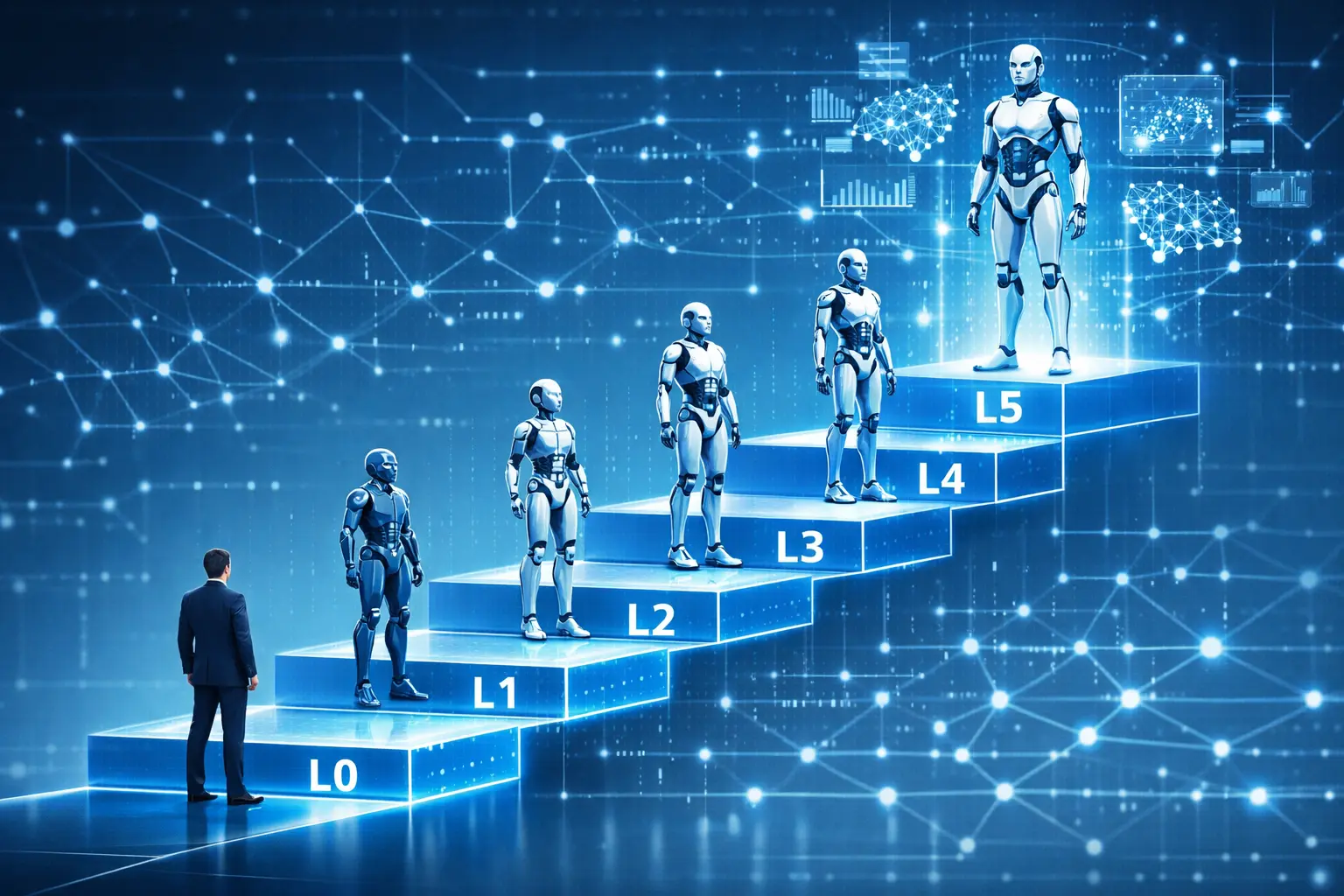

Researchers at HKUST and Tsinghua University tackled this problem head-on by proposing a six-level hierarchy (L0–L5) for data agents. This taxonomy, inspired by the well-known SAE driving automation scale used in autonomous vehicles, focuses on how much autonomy a system has and what role humans play at each stage. Let’s break down what each level means and why it matters.

The L0–L5 Data Agent Hierarchy Explained

The HKUST/Tsinghua framework establishes six distinct levels of data agent autonomy. Each level represents a significant shift in the balance between human control and AI independence. Understanding these levels helps organizations choose the right type of agent for their needs and set appropriate expectations.

Level 0: Manual Operations

At the base of the hierarchy, there’s no agent at all. Data tasks like extraction, cleaning, and analysis rely entirely on human experts. Any automation is limited to deterministic scripts that don’t adjust to context. The human determines the workflow, executes every step, and monitors all results. Think of traditional ETL pipelines and spreadsheets—there’s no AI reasoning or adaptation happening here.

Level 1: Assisted Intelligence

An L1 data agent works like a helpful intern. It can answer questions, translate natural language to SQL (NL2SQL), or summarize tabular data, but the human still defines the problem and verifies every output. Agents at this level perform reactive tool calls—they respond to user prompts without any long-term planning or memory. Examples include TableQA systems and GitHub Copilot-style code suggestions. This represents the first evolutionary leap: moving from purely manual operations to AI-assisted intelligence.

Level 2: Partial Autonomy

Level 2 systems begin to perceive their environment. They can call external APIs, search databases, and adapt their workflows based on real-time feedback. The human still orchestrates tasks, but the agent can choose which tool to use and adjust parameters on its own. AutoSQL agents that optimize queries using feedback loops or data cleaning bots that adapt their operations represent this level. The key difference from L1 is environmental perception and adaptive tool selection.

Level 3: Conditional Autonomy

At L3, the agent becomes the dominant executor while the human shifts to a supervisory role. These agents can plan multi-step workflows, decide execution order, and handle branching logic independently. They monitor their own progress and determine when to ask for human approval. If something goes wrong, they can roll back and adjust their plan. Data science platforms that orchestrate entire ETL pipelines and produce dashboards exemplify this level. Humans approve final actions, but the heavy lifting is done by the agent.
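To make the L3 behavior concrete, here is a minimal Python sketch of a plan executor that pauses for human approval on flagged steps and rolls back completed work when a step fails or is rejected. The names `Step`, `needs_approval`, `execute_plan`, and the `approve` callback are hypothetical and chosen for illustration; they do not come from the HKUST/Tsinghua survey or any particular platform.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    name: str
    run: Callable[[], None]       # performs the step
    undo: Callable[[], None]      # reverses the step's effect
    needs_approval: bool = False  # hypothetical flag: ask a human before running


def execute_plan(steps: List[Step], approve: Callable[[str], bool]) -> List[str]:
    """Run steps in order; undo completed steps if one fails or is rejected."""
    done: List[Step] = []
    log: List[str] = []
    for step in steps:
        try:
            if step.needs_approval and not approve(step.name):
                raise PermissionError(f"{step.name} rejected by supervisor")
            step.run()
            done.append(step)
            log.append(f"ran {step.name}")
        except Exception as exc:
            log.append(f"failed {step.name}: {exc}")
            # Roll back in reverse order so later effects are undone first.
            for finished in reversed(done):
                finished.undo()
                log.append(f"undid {finished.name}")
            break
    return log


# Toy pipeline: the supervisor rejects the final "publish" step.
state: List[str] = []
plan = [
    Step("extract", lambda: state.append("raw"), lambda: state.remove("raw")),
    Step("publish", lambda: state.append("dashboard"),
         lambda: state.remove("dashboard"), needs_approval=True),
]
log = execute_plan(plan, approve=lambda name: False)
print(log)
```

In this toy run the extract step completes, the human rejects the publish step, and the executor undoes the extract step. A real system would need durable state and idempotent undo operations, which this sketch leaves out.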

Level 4: High Autonomy

Level 4 agents are proactive rather than reactive. They monitor data systems continuously, diagnose anomalies, update models, and self-recover from errors with minimal human intervention. These agents have persistent memory and state—they store context, reason across sessions, and adjust their goals over time. Human involvement becomes necessary only when the agent encounters conditions outside its operational design domain. Autonomous observability agents that detect problems and fix data quality issues with minimal oversight represent this level.

Level 5: Full Autonomy (Generative)

The highest tier envisions agents that are truly self-governing. They set their own objectives, design data analysis workflows, create new tools, and collaborate with other agents—all without explicit programming. These agents demonstrate generative intelligence: they can innovate new methods and even create entirely new paradigms. This level remains aspirational. No production systems exist at L5 today, and major research challenges remain before we get there.

Data Agent Levels at a Glance

| Level | Autonomy & Human Role | Agent Capabilities | Real-World Examples |
|---|---|---|---|
| L0 – Manual | Human dominates; agent absent | No reasoning or tool use; deterministic outputs | Manual ETL pipelines, spreadsheets |
| L1 – Assisted | Human is primary integrator; agent assists | Models answer queries but lack memory or planning | NL2SQL assistants, TableQA systems, Copilot-style suggestions |
| L2 – Partial | Human orchestrates; agent executes | Agent adapts execution, calls external tools, manages small pipelines | AutoSQL agents, DataGPT, adaptive data cleaning bots |
| L3 – Conditional | Human supervises; agent dominates tasks | Plans multi-step workflows, handles dependencies, requests approval | Agentic data science platforms, automated ETL with dashboards |
| L4 – High | Human is onlooker; agent is proactive | Persistent memory, internal goals, self-recovery from failures | Autonomous observability agents, proactive anomaly detection |
| L5 – Generative | Human absent; agent sets own objectives | Innovates new methods, designs workflows, collaborates with other agents | Aspirational (not yet realized) |

The Evolutionary Leaps Between Levels

The HKUST/Tsinghua survey identifies specific evolutionary leaps required to progress through the hierarchy. Understanding these transitions helps explain why moving up the autonomy ladder is so challenging.

From L0 to L1 (Assisted Intelligence): The system gains basic reasoning and natural language understanding. Manual operations become augmented by tool-based assistance for the first time.

From L1 to L2 (Perception): Agents acquire sensors in the form of API connectors and database access. They can now perceive their environment, enabling adaptive tool calls and context-aware behavior.

From L2 to L3 (Task Dominance Transfer): Control shifts from human to agent. The agent plans and executes workflows while humans supervise and intervene only when necessary. This is a significant handover of responsibility.

From L3 to L4 (Supervision Removal): Agents gain persistent memory and fault tolerance, allowing them to operate over extended periods and handle errors autonomously. Human oversight becomes occasional rather than constant.

From L4 to L5 (Innovation): Agents become generative, innovating new methods and coordinating with other agents. They’re no longer limited to predefined tools or goals—they can create entirely new approaches.
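One way to read the five leaps is as a cumulative checklist: an agent sits at the highest level for which it has made every leap up to that point. The Python sketch below encodes that reading. The `Capabilities` fields and the `autonomy_level` function are hypothetical simplifications for illustration, not definitions from the survey, and real systems rarely reduce to five booleans.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Capabilities:
    reasoning: bool = False          # L0 -> L1: basic reasoning, natural language understanding
    perception: bool = False         # L1 -> L2: API connectors, database access
    plans_workflows: bool = False    # L2 -> L3: agent plans and executes, human supervises
    persistent_memory: bool = False  # L3 -> L4: memory and fault tolerance across sessions
    generative: bool = False         # L4 -> L5: invents new methods and tools


# Order matters: each leap builds on the previous one.
LEAPS = ("reasoning", "perception", "plans_workflows", "persistent_memory", "generative")


def autonomy_level(caps: Capabilities) -> int:
    """Count consecutive leaps from the bottom; stop at the first missing one."""
    level = 0
    for name in LEAPS:
        if not getattr(caps, name):
            break
        level += 1
    return level


nl2sql_assistant = Capabilities(reasoning=True)
pipeline_agent = Capabilities(reasoning=True, perception=True, plans_workflows=True)
memory_without_planning = Capabilities(reasoning=True, perception=True, persistent_memory=True)

print(autonomy_level(nl2sql_assistant))         # 1
print(autonomy_level(pipeline_agent))           # 3
print(autonomy_level(memory_without_planning))  # 2
```

The last example shows the design choice: under this cumulative reading, an agent with persistent memory but no workflow planning is still classified as L2, because the L2 to L3 handover has not happened.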

How Data Agent Levels Compare to Other Autonomy Frameworks

The HKUST/Tsinghua hierarchy isn’t the only attempt to measure agent autonomy. Several other frameworks offer complementary perspectives, each emphasizing different aspects of the autonomy question.

Capability-Focused Frameworks

Bessemer Venture Partners Scale (L0–L6): The prominent venture capital firm proposes seven levels for AI agents, ranging from no agency (manual) to agents managing teams of other agents. Their framework emphasizes the progression toward multi-agent coordination:

| BVP Level | Description |
|---|---|
| L0 | No agency (manual operations) |
| L1 | Chain-of-thought reasoning for code suggestions |
| L2 | Conditional co-pilot with human approval |
| L3 | Reliable multi-step autonomy |
| L4 | Fully autonomous job performance |
| L5 | Teams of agents working together |
| L6 | Agents managing teams of agents |

This scale partially aligns with the data agent hierarchy’s focus on multi-agent collaboration at higher levels, though BVP extends further into agent-of-agents territory.

Vellum’s Six Levels of Agentic Behavior: Vellum AI categorizes agents from rule-based followers (L0) to creative agents (L5). Their L2 emphasizes tool use, L3 adds planning and acting, L4 introduces persistent state and self-triggering, and L5 supports creative logic and tool design. This progression closely mirrors the data agent hierarchy while highlighting the importance of memory and creativity.

HuggingFace Star Rating: This developer-oriented framework assigns zero to four stars based on how much control the model has over program flow. A zero-star agent is a simple processor; four stars indicate fully autonomous code generation. The data agent L1 corresponds roughly to one star, L2 to two stars, L3 to three stars, and L4/L5 to four stars. However, this framework ignores human interaction and risk considerations.

Interaction-Focused Frameworks

Knight First Amendment Institute’s User-Centric Levels: This framework defines five levels based on the user’s role rather than technical capability:

| Level | User Role | Description |
|---|---|---|
| L1 | Operator | User fully controls planning and decisions; agent provides on-demand assistance |
| L2 | Collaborator | User and agent share planning; fluid control handoffs |
| L3 | Consultant | Agent takes the lead; consults user for expertise and preferences |
| L4 | Approver | Agent operates independently; requests approval for high-risk situations |
| L5 | Observer | Agent fully autonomous; user can only monitor or activate emergency stop |

This model emphasizes human control and consent rather than technical capability. Data agent L3 aligns with “approver” (human approves actions), while L4–L5 align with “observer” (agent acts autonomously under broad supervision). The Knight framework also proposes autonomy certificates and governance mechanisms for multi-agent systems.

AWS Staged Autonomy Model: AWS categorizes production agents into four levels: predefined actions, dynamic workflows, partially autonomous, and fully autonomous. Notably, most deployed agents in 2025 operate at levels 2–3, similar to L2 and L3 in the data agent hierarchy. Full Level 4 autonomy remains rare in production environments, underscoring the gap between research capabilities and real-world adoption.

Maturity-Focused Frameworks

Capgemini’s 2025 Maturity Scale: This framework proposes six points ranging from no agent involvement (Level 0) to fully autonomous, self-evolving systems (Level 5). Their definitions emphasize human involvement and process integration:

| Level | Description |
|---|---|
| 0 | No agent involvement |
| 1 | Deterministic automation |
| 2 | AI-augmented decision-making |
| 3 | AI integrated into business processes |
| 4 | Independent operation by multi-agent teams |
| 5 | Full execution authority with self-evolution |

According to Capgemini’s research, only 15% of business processes are expected to operate at Level 3 or higher autonomy within the next year, signaling that most organizations remain cautious about deploying fully autonomous systems.

Framework Comparison Summary

| Framework | Focus | Number of Levels | Key Differentiator |
|---|---|---|---|
| HKUST/Tsinghua (Data Agents) | Data tasks & autonomy | 6 (L0–L5) | SAE-inspired, data-specific taxonomy |
| Bessemer Venture Partners | Investment maturity | 7 (L0–L6) | Multi-agent and agent-of-agents focus |
| Vellum | Agentic behavior | 6 (L0–L5) | Creativity and tool design emphasis |
| Knight Institute | User interaction | 5 (L1–L5) | User-role-centric, governance focus |
| AWS | Production deployment | 4 levels | Real-world deployment readiness |
| Capgemini | Business maturity | 6 (L0–L5) | Enterprise process integration |

Real-World Adoption: Where Are We Today?

Despite the challenges, data agents are already delivering measurable value across multiple industries. Here’s what the numbers tell us:

Customer Service and IT Operations: Salesforce’s Agentforce platform handled over 1 million support requests in early 2025 with 93% accuracy. The company projects $50 million in annual cost savings from this deployment. Their service agent has resolved the majority of cases without human intervention, while an SDR (Sales Development Rep) agent generated $1.7 million in new pipeline from dormant leads.

Current Adoption Levels: According to Capgemini’s research, 2% of organizations have deployed AI agents at scale, 12% at partial scale, 23% have launched pilots, and 61% are still exploring deployment options. Most deployments remain at early stages of autonomy: only 15% of business processes are expected to operate at semi-autonomous to fully autonomous levels within the next year, rising to 25% by 2028.

Economic Potential: Capgemini projects that AI agents could generate up to $450 billion in economic value by 2028 through revenue growth and cost savings across surveyed markets. However, this potential comes with a significant caveat: trust in fully autonomous agents has actually declined over the past year.

Infrastructure Readiness: The research reveals that 80% of organizations lack mature AI infrastructure, and fewer than one in five report high levels of data readiness. Ethical concerns around data privacy, algorithmic bias, and lack of explainability remain widespread barriers to adoption.

Challenges and Research Frontiers

While the L0–L5 hierarchy provides a useful roadmap, significant challenges remain before higher-level agents become practical:

Reliability and Error Compounding: Even a 95% success rate per step results in only about 60% success across ten steps. This mathematical reality necessitates bounded autonomy with human checkpoints, especially for complex, multi-step workflows.
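The arithmetic behind that figure is easy to check. The Python sketch below assumes that each step succeeds independently with the same probability, which is a simplification (real failures are often correlated). The function names `chain_success` and `max_steps` are illustrative.

```python
def chain_success(per_step: float, steps: int) -> float:
    """End-to-end success probability for `steps` independent steps."""
    return per_step ** steps


def max_steps(per_step: float, target: float) -> int:
    """Largest number of steps whose end-to-end success stays at or above `target`."""
    if not (0 < per_step < 1) or not (0 < target <= 1):
        raise ValueError("per_step must be in (0, 1) and target in (0, 1]")
    steps = 0
    while chain_success(per_step, steps + 1) >= target:
        steps += 1
    return steps


print(round(chain_success(0.95, 10), 3))  # 0.599, i.e. about 60%
print(max_steps(0.95, 0.80))              # 4
```

Under these assumptions, an agent that is 95% reliable per step can run only four steps before its end-to-end success drops below 80%. That is one way to decide where to place human checkpoints in a longer workflow.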

Long-term Reasoning and Self-Correction: Today’s agents struggle to plan effectively in uncertain environments, recover gracefully from failed API calls, or adapt to changing web content. These limitations keep most systems at L2–L3 levels.

State Persistence and Memory: High autonomy requires agents to remember context across sessions and tasks. This capability is only beginning to emerge in production systems and remains technically challenging at scale.

Multi-Agent Coordination: Research shows that coordinating teams of agents yields measurable but small gains. Multi-agent systems must manage communication, delegation, and conflict resolution, and these problems grow rapidly harder as the number of agents, and the number of possible interactions between them, increases.

Trust, Identity, and Compliance: Organizations must build ethical AI practices, redesign processes, and strengthen data foundations to earn user trust. Agent-to-agent trust models require cryptographic proofs and continuous risk scoring. Governance frameworks like Gaia-X and eIDAS 2.0 are working to embed verifiable machine identities into agent workflows.

What This Means for Organizations

The L0–L5 data agent hierarchy provides a practical vocabulary for discussing AI autonomy. It clarifies what capabilities are expected at each level, how human involvement changes, and what evolutionary leaps are required to progress. Here are key takeaways for different stakeholders:

For Technology Leaders: Most organizations should currently focus on L1–L3 deployments, which combine AI assistance with meaningful human oversight. Full L4 autonomy is rare in production, and L5 remains aspirational.

For Product Teams: Understand that autonomy is a design choice, not an inevitable outcome of increasing capability. The Knight Institute framework emphasizes that developers can deliberately calibrate autonomy levels independent of technical sophistication.

For Risk and Compliance Teams: Different autonomy levels imply different liability and governance requirements. As the HKUST/Tsinghua framework suggests, responsibility boundaries should be clearly defined at each level.

For AI Researchers: The evolutionary leaps between levels point to specific technical challenges worth solving: improved error recovery, persistent memory systems, multi-agent coordination protocols, and trust mechanisms.

The road to L4 and L5 is long and filled with challenges around reliability, memory, coordination, and trust. Yet the potential rewards are substantial: improved productivity, new discoveries, and genuinely intelligent partners for human workers. By building governance into the architecture and progressing through the hierarchy responsibly, organizations can ensure that data agents revolutionize autonomy without sacrificing safety or human values.

Sources:

- A Survey of Data Agents: Emerging Paradigm or Overstated Hype? - arXiv (HKUST/Tsinghua)

- GitHub - HKUSTDial/awesome-data-agents

- Rise of Agentic AI - Capgemini Research Institute

- Bessemer’s AI Agent Autonomy Scale - Bessemer Venture Partners

- LLM Agents: The Six Levels of Agentic Behavior - Vellum

- Levels of Autonomy for AI Agents - Knight First Amendment Institute

- The Practical Guide to the Levels of AI Agent Autonomy - Sean Falconer

- 1 Million Support Requests Handled by Agentforce - Salesforce

- Why Autonomous Infrastructure is the Future - CNCF